Why More AI Startups Are Choosing Model Aggregators Over Single-Vendor APIs

In the fast-moving world of AI innovation, speed and agility are everything. Startups need to ship quickly, iterate quickly, and stay within tight budgets. Being tied to a single AI API makes all of that harder than it needs to be.

In the past, a startup typically picked one API provider, integrated its tools, and worked around its weaknesses. Now that capable models are arriving from many vendors (Claude, Mistral, Gemini, LLaMA, and others), committing to just one no longer makes sense. Different models excel at different tasks, and picking only one limits what your application can do.

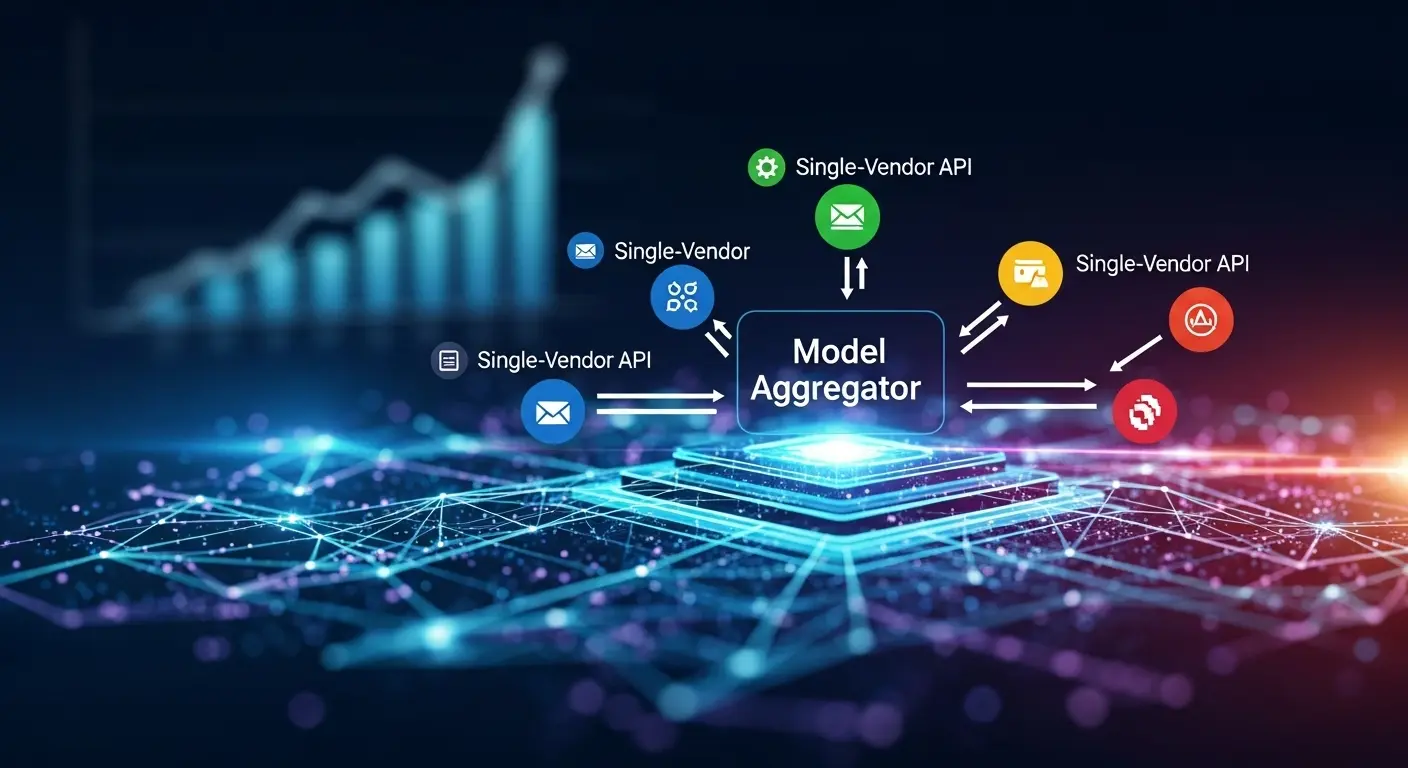

That's why more and more startups are making the switch. Instead of hardwiring their stack to a single vendor, they're turning to model aggregators: platforms that expose many AI APIs through one interface.

Model aggregation simplifies all of this. Developers can test, compare, and swap models without rewriting code or juggling separate authentication for each provider. It's faster to build, easier to scale, and better for long-term flexibility.

One such platform, AI/ML API, lets teams use 300+ models without the hassle of managing multiple keys or SDKs. For startups that want less integration friction and more value from generative AI models, this approach is quickly becoming the new standard.

What Is a Model Aggregator in the AI World?

An AI model aggregator is a platform that gives developers access to multiple best-in-class AI models through one consistent interface. Unlike single-vendor APIs that limit you to a specific provider’s stack, model aggregators act as a gateway—routing your requests to the most suitable model based on your needs.

You don’t host the models yourself. Instead, you send requests to the aggregator’s endpoint, and it handles the rest—whether you're calling an LLM, a vision model, or a speech recognizer. This approach dramatically reduces the time and effort required to experiment with and deploy different providers.

For example, a single-vendor AI API might only offer access to its own proprietary LLM. A model aggregator, by contrast, connects you to providers such as Anthropic (Claude), Google (Gemini), Meta (LLaMA), and Mistral, all within one ecosystem.

AI/ML API is a prime example of this approach. It doesn’t build its own generative AI models; instead, it curates access to more than 300 models from leading vendors, standardizing the request format so that switching or combining models is seamless.

This developer-first platform is especially valuable for teams who want to innovate quickly without committing to a single tech stack. With a unified AI API, developers can focus on delivering features while the aggregator handles the complexity of model orchestration behind the scenes.
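To make the gateway idea concrete, here is a minimal sketch of how an aggregator might dispatch one request shape to different backends by model name. It is not AI/ML API's actual implementation: the `Gateway` class, the `ChatRequest` type, the backend functions, and model names such as `claude-example` are all hypothetical, and the backends are offline stubs where a real gateway would make authenticated HTTP calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ChatRequest:
    """One request shape used for every model (hypothetical)."""
    model: str
    prompt: str


# Hypothetical stub backends; a real gateway would call each vendor's API here.
def call_anthropic(req: ChatRequest) -> str:
    return f"[anthropic:{req.model}] {req.prompt}"


def call_google(req: ChatRequest) -> str:
    return f"[google:{req.model}] {req.prompt}"


class Gateway:
    """Routes a request to a backend based on the model name's prefix."""

    def __init__(self) -> None:
        self._routes: Dict[str, Callable[[ChatRequest], str]] = {}

    def register(self, prefix: str, backend: Callable[[ChatRequest], str]) -> None:
        self._routes[prefix] = backend

    def complete(self, req: ChatRequest) -> str:
        # Pick the first backend whose prefix matches the requested model.
        for prefix, backend in self._routes.items():
            if req.model.startswith(prefix):
                return backend(req)
        raise ValueError(f"no backend registered for model {req.model!r}")


gateway = Gateway()
gateway.register("claude", call_anthropic)
gateway.register("gemini", call_google)

# The caller changes only the model string, never the calling code.
print(gateway.complete(ChatRequest(model="claude-example", prompt="Hi")))
print(gateway.complete(ChatRequest(model="gemini-example", prompt="Hi")))
```

Because the application only ever builds a `ChatRequest`, swapping one model for another is a one-string change rather than a new integration.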

The Risks of Relying on a Single Vendor API

Relying on a single AI API provider is easier in the short run, but it can cause long-term pain, especially for startups that need speed and constant experimentation.

Vendor lock-in is among the greatest risks. When you couple tightly to one provider's API, your entire workflow depends on its infrastructure, pricing, and model quality. If output quality drops or prices rise, you either absorb the change or, worse, rebuild your integration from scratch.

Then there's the issue of limited model diversity. A single vendor may be strong at text but offer weaker options, or none at all, for vision or audio workloads. If you want to build truly multimodal experiences that combine language, images, and speech, you'll likely need additional integrations, each with its own SDKs and quirks.

These constraints get in the way of experimentation. Trying out multiple generative AI models becomes a chore when each one requires a different implementation. For agile teams, that friction is a momentum killer.

Why Aggregators Empower Faster, Smarter AI Startups

For AI startups, time is critical. How quickly a team can build, test, and iterate often decides who leads and who lags. That's why model aggregators are gaining traction: they offer single-point access to multiple AI APIs, helping startups move faster with fewer technical hurdles.

Perhaps the greatest advantage is consistency. Aggregators standardize input and output formats across models, sparing you from rewriting payloads or handling provider-specific quirks. That simplicity lets you plug in a model, validate it, and keep it in place without disrupting the rest of your stack.
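Here is a rough sketch of that standardization, assuming simplified response shapes loosely modeled on common chat APIs (real payloads carry many more fields and may differ). Each hypothetical adapter maps a provider-specific payload onto one shared `Completion` type, so application code never branches on the vendor.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Completion:
    """Provider-neutral response handed to application code."""
    provider: str
    text: str


def from_openai_style(raw: Dict[str, Any]) -> Completion:
    # Simplified shape: {"choices": [{"message": {"content": ...}}]}
    return Completion("openai-style", raw["choices"][0]["message"]["content"])


def from_anthropic_style(raw: Dict[str, Any]) -> Completion:
    # Simplified shape: {"content": [{"type": "text", "text": ...}]}
    text = "".join(b["text"] for b in raw["content"] if b["type"] == "text")
    return Completion("anthropic-style", text)


def from_gemini_style(raw: Dict[str, Any]) -> Completion:
    # Simplified shape: {"candidates": [{"content": {"parts": [{"text": ...}]}}]}
    parts = raw["candidates"][0]["content"]["parts"]
    return Completion("gemini-style", "".join(p["text"] for p in parts))


# Hypothetical adapter registry keyed by payload style.
ADAPTERS: Dict[str, Callable[[Dict[str, Any]], Completion]] = {
    "openai-style": from_openai_style,
    "anthropic-style": from_anthropic_style,
    "gemini-style": from_gemini_style,
}


def normalize(provider: str, raw: Dict[str, Any]) -> Completion:
    """Convert a provider-specific payload into the shared shape."""
    return ADAPTERS[provider](raw)


samples = {
    "openai-style": {"choices": [{"message": {"content": "Paris"}}]},
    "anthropic-style": {"content": [{"type": "text", "text": "Paris"}]},
    "gemini-style": {"candidates": [{"content": {"parts": [{"text": "Paris"}]}}]},
}

for name, payload in samples.items():
    print(normalize(name, payload))
```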

Aggregators also enable smarter experimentation. Need to compare Claude and Gemini on a Q&A task? An aggregator lets you send the same prompts to both through one backend and A/B test the responses. Switching models this easily shortens the feedback loop and leads to better-informed product decisions.
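The A/B comparison can be sketched as a loop that sends the same evaluation set to each model and scores the answers. The two models here are hypothetical stubs, and exact-match accuracy is a deliberately simple stand-in metric; real evaluations usually need graded or human-judged scoring.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical stub models standing in for calls through one aggregator backend.
def model_a(question: str) -> str:
    answers = {"Capital of France?": "Paris", "2 + 2?": "4"}
    return answers.get(question, "unknown")


def model_b(question: str) -> str:
    answers = {"Capital of France?": "Paris", "2 + 2?": "5"}
    return answers.get(question, "unknown")


def ab_test(
    models: Dict[str, Callable[[str], str]],
    eval_set: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Run every question through every model; return exact-match accuracy."""
    scores: Dict[str, float] = {}
    for name, ask in models.items():
        correct = sum(ask(q).strip() == expected for q, expected in eval_set)
        scores[name] = correct / len(eval_set)
    return scores


eval_set = [("Capital of France?", "Paris"), ("2 + 2?", "4")]
print(ab_test({"model-a": model_a, "model-b": model_b}, eval_set))
```

Because both models sit behind the same call signature, adding a third candidate is one more dictionary entry.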

Beyond testing, many aggregators provide infrastructure features such as batch requests, fallback, and model routing. Instead of building that logic in-house, developers can rely on the platform to handle failover and latency optimization automatically. The result is better uptime, smoother performance, and easier scaling.
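Whether the platform or your own code handles it, fallback follows a simple pattern: try models in priority order and return the first success. This sketch assumes hypothetical stub models and a made-up `AllProvidersFailed` exception; a production version would also add timeouts, retries with backoff, and narrower error handling.

```python
from typing import Callable, List, Tuple


class AllProvidersFailed(Exception):
    """Raised when every model in the fallback chain has failed."""


def with_fallback(
    chain: List[Tuple[str, Callable[[str], str]]],
    prompt: str,
) -> Tuple[str, str]:
    """Try each (model_name, call) in order; return the first success."""
    errors = []
    for name, call in chain:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real client would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stubs: the primary times out, the backup answers.
def primary(prompt: str) -> str:
    raise TimeoutError("upstream timed out")


def backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


print(with_fallback([("primary-model", primary), ("backup-model", backup)], "Hello"))
```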

Equally important is observability. Aggregators offer centralized logs, usage tracking, and cost reports across all models. This allows teams to make informed decisions about inference optimization and resource allocation—without needing multiple dashboards or billing systems.
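Centralized cost reporting boils down to recording token usage per model and multiplying by a price table. The sketch below assumes a hypothetical `UsageLedger` class and invented per-1,000-token prices; it is not how any particular aggregator computes its bills.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical prices in USD per 1,000 tokens; real prices vary by model and vendor.
PRICE_PER_1K = {"model-a": 0.50, "model-b": 2.00}


@dataclass
class UsageLedger:
    """Accumulates token usage per model across every request."""
    tokens: Dict[str, int] = field(default_factory=lambda: defaultdict(int))

    def record(self, model: str, prompt_tokens: int, completion_tokens: int) -> None:
        self.tokens[model] += prompt_tokens + completion_tokens

    def cost_report(self) -> Dict[str, float]:
        """Return estimated spend per model, rounded to cents."""
        return {
            model: round(count / 1000 * PRICE_PER_1K[model], 2)
            for model, count in self.tokens.items()
        }


ledger = UsageLedger()
ledger.record("model-a", prompt_tokens=1200, completion_tokens=800)
ledger.record("model-b", prompt_tokens=300, completion_tokens=200)
ledger.record("model-a", prompt_tokens=500, completion_tokens=500)
print(ledger.cost_report())
```

One ledger across all models is what replaces the separate dashboards and billing systems mentioned above.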

How a Unified AI API Simplifies Multimodal Model Integration

Early-stage startups need velocity. They don’t have time to wrangle multiple APIs, set up provider-specific SDKs, or deal with inconsistent inputs. That’s where AI/ML API changes the game: it gives teams instant access to over 300 AI models across multiple modalities, all through a single, unified endpoint.

With AI/ML API, there’s no need to manage separate authentication keys, dashboards, or SDKs for every provider. Instead, developers call one consistent AI API—whether they’re generating text with Claude, reasoning over inputs with Gemini, or transcribing audio using Whisper. This drastically shortens integration time and reduces setup friction.

Switching between models is also seamless. Want to compare Gemini’s reasoning with Mistral’s speed? No backend rewrite is required; the integration layer handles routing behind the scenes. This flexibility makes it ideal for experimentation, especially in use cases that combine modalities such as voice input, image captioning, and multi-turn chat.

AI/ML API supports leading models including Claude, Gemini, Mistral, LLaMA, and others. Whether your product needs a lightweight summarizer, a powerful LLM, or an open-weight model like LLaMA that you can also self-host for privacy, you’ll find options in one place. You can even combine them in a single flow without worrying about model-specific syntax or formatting.

For startups prototyping new features or building MVPs, unified access means fewer distractions, faster cycles, and more room to innovate. AI/ML API effectively becomes a startup’s AI toolkit, helping developers build and test ideas in hours rather than weeks.

Real-World Use Cases: What Startups Are Building with Aggregators

Model aggregation isn’t just a backend convenience—it’s fueling real innovation across sectors. By connecting to multiple multimodal AI models through a unified API, startups can move faster and iterate smarter.

For example, AI assistants are becoming more capable by combining Whisper for speech-to-text with Claude for conversational reasoning. This enables voice-powered bots for customer support, onboarding, or real-time tutoring—all deployable in hours using a platform like AI/ML API.

In research and analysis, teams are leveraging Gemini’s vision and language capabilities to process visual data, extract key information, and summarize findings. With everything accessible through a single API, the workflow is simpler and more scalable.

Privacy-conscious developers are using local deployments of LLaMA for sensitive tasks while routing general queries through hosted models like Claude. This hybrid setup balances control, compliance, and performance.
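One way to sketch that hybrid setup is a router that keeps prompts matching a sensitivity check on local infrastructure and sends everything else to a hosted model. The regex is a placeholder heuristic (email addresses and nine-digit IDs), not a real PII detector, and both model functions are hypothetical stubs.

```python
import re
from typing import Callable

# Placeholder heuristic: treat anything that looks like an email address or a
# standalone 9-digit ID as sensitive. A real system would use a PII classifier.
SENSITIVE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+|\b\d{9}\b")


def local_llama(prompt: str) -> str:
    # Hypothetical stub for a self-hosted open-weight model.
    return "local"


def hosted_model(prompt: str) -> str:
    # Hypothetical stub for a hosted model reached through an aggregator.
    return "hosted"


def route(
    prompt: str,
    local: Callable[[str], str] = local_llama,
    hosted: Callable[[str], str] = hosted_model,
) -> str:
    """Keep sensitive prompts on local infrastructure; send the rest out."""
    if SENSITIVE.search(prompt):
        return local(prompt)
    return hosted(prompt)


print(route("Summarize the ticket from jane.doe@example.com"))  # local
print(route("Explain vector databases in one paragraph"))       # hosted
```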

Vertical-specific startups—in legaltech, medtech, and fintech—are also running A/B tests across multiple generative AI models to pinpoint which one excels at their unique challenges. Without aggregation, this would require days of integration effort. With it, switching between models takes minutes.

Whether it's voice, vision, or deep reasoning, startups are using model orchestration to get the best results—without managing five separate APIs.

Conclusion: Aggregators Are the Future of the AI Stack

As AI startups speed up and grow across modalities, model aggregation is becoming the cornerstone of smart development. Instead of locking into a single AI API provider, teams are choosing flexibility, performance, and velocity.

Platforms like AI/ML API make this shift possible by offering unified access to leading AI models, including Claude, Gemini, LLaMA, and Mistral, through one developer-friendly interface. No more key switching, endpoint rewriting, or vendor roadblocks.

Whether you are building a chatbot, a vision pipeline, or a full-stack AI product, aggregation gives you the power to test, scale, and ship faster.

Try model aggregation with AI/ML API and get easy access to 300+ models without the fuss.

Leave your comment

- No comments yet.

Recommended AI Tools

Carefully selected AI tools to improve your work, study, and live efficiency.

Related Articles

Standing at this moment in 2025, when we look back at the development journey of artificial intelligence, we witness how this revolutionary technology has reshaped every aspect of human society. From initial theoretical concepts to today's practical applications, each step forward in AI technology has changed the way we live. Let's revisit this fascinating journey together.

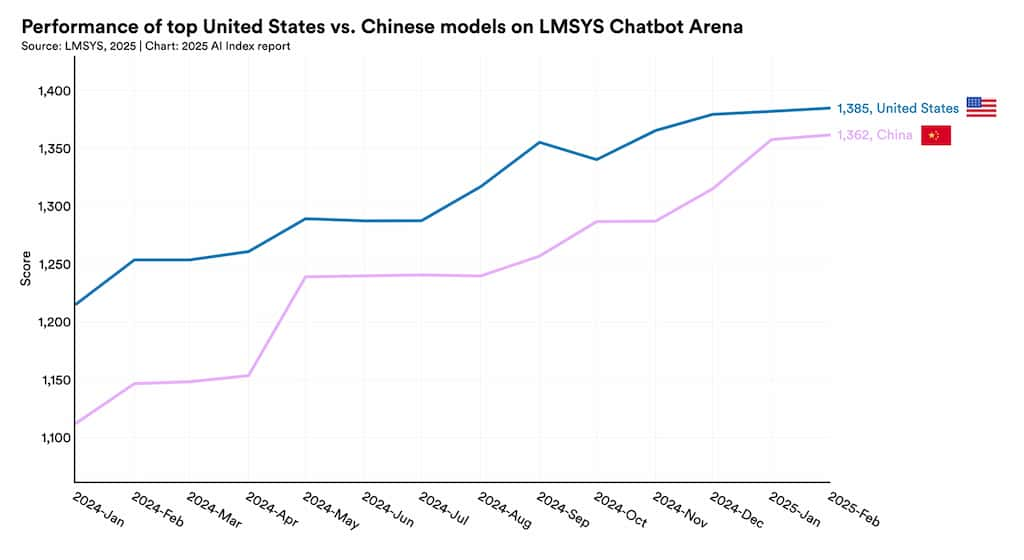

In 2024 and early 2025, the field of artificial intelligence (AI) achieved remarkable progress, with its impact spanning across various industries. AI models demonstrated significant performance improvements in multiple benchmarks, marking a new level of capability in handling complex tasks <sup>[1]</sup>. From healthcare to transportation, AI is integrating into daily life at an unprecedented pace <sup>[1]</sup>. Business adoption and investment in AI also showed strong growth, particularly in generative AI <sup>[1]</sup>. The United States maintained its lead in AI model development, but China is rapidly closing the quality gap <sup>[1]</sup>. Meanwhile, the responsible AI ecosystem continues to evolve, with increasing attention to ethical considerations and regulation <sup>[1]</sup>. Global optimism about AI has risen overall, though regional differences persist <sup>[1]</sup>.

Gemini CLI is an open-source AI agent that brings Gemini directly into your terminal, with MCP support for extensibility and Human in the Loop for oversight. Individual developers get unmatched usage limits at no cost.